How To Build Manga with AI

And Make Lots of New Friends in China!

On July 21st, Lore Machine dropped its first manga publication Into the Unknown to hundreds of fans at Bilibili World in Shanghai. The partner-driven work was brought to life alongside HP’s gaming brand OMEN and Anonymous Content’s The Lab. The story follows the inter-dimensional journey of a gamer dude named Ying, who falls through a vortex into a subterranean landscape inhabited by mind puzzles, new pals, and a colossal robotic spider. All 36 pages of the audio-visual journey were produced by humans and AI.

You can read the English version here.

The following is the story behind the story…

Foundational Text

Like all Lore Machine projects, it started with text.

At first, the text came from our friends at Anonymous Content’s The Lab, which is constantly on the lookout for new production tools to help articulate the missions of its brand partners. As a proof of concept, we ran a few of The Lab’s film scripts through Lore Machine’s Story Visualization System. One of these experiments caught the eye of HP, whose gaming division OMEN was interested in building a generative storyscape from the ground up.

To kick the project into gear, we needed a foundational text. Our genre of choice was sci-fi manga. So The Lab invited screenwriter and all-around badass Phil Gelatt to the party. If you haven’t experienced Phil’s stories in Love, Death & Robots or The Spine of Night, what are you even doing reading this post?

We decided that a script was the best format for Phil’s story. A script is more structured than prose and makes it easier for Lore Machine’s System to identify key story attributes like characters, locations and action. We made three story suggestions:

Psychedelic: AI image generators tend to hallucinate in unexpected ways. These glitches are almost always trippy. For more perspective, check out Andy Weisman’s musings on the topic in Hallucinations as a feature, not a bug. We aimed to combine these unexpected moments with vibrant color to push the manga form into uncharted territory.

Epic: To ensure an eye-melting experience, we needed as much scenery as possible.

Action-packed: Volumes of manga tend to play out like an action sequence over a condensed period of time. We aimed to punch readers in the face.

The result was a 20-page ripper of a script. Spoiler alert: Ying is a gamer dude down on his luck. He’s stuck on this one level. Exhausted one night while deep in gameplay, he hears a voice. Next thing, he’s being sucked through a psychedelic wormhole. He lands on the cold hard ground of a subterranean nightmarescape patrolled by a massive robo-spider. With the help of new friends and his OMEN gaming laptop, Ying defeats the spider. The victory unlocks a labyrinth of infinite complexity.

Text Synthesis

We ran Phil’s script through Lore Machine’s Natural Language Processing model to identify key themes, locations, characters and even vibes. Some linguistic edge cases were addressed using off-the-shelf tools from the Natural Language Toolkit. The result was a wealth of story data that we used as a compass for navigating the script-to-manga maze.
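Lore Machine’s NLP model isn’t public, so what follows is only a minimal, standard-library sketch of the simplest slice of that job: pulling locations and speaking characters out of a script. It assumes conventional screenplay formatting (scene headings that start with INT. or EXT., character cues in all caps on their own line). The regexes, the `extract_story_attributes` name and the toy script lines in the usage example are hypothetical, invented for illustration; themes and vibes would need an actual language model.

```python
import re
from collections import Counter
from typing import List

# Scene heading ("slugline"), e.g. "INT. ARCADE - NIGHT" or "INT./EXT. CAR - DAY".
SLUGLINE = re.compile(
    r"^(?:INT|EXT|INT\./EXT)\.\s+(?P<location>.+?)(?:\s+-\s+(?P<time>[A-Z ]+))?$"
)
# Character cue: an all-caps name alone on its line, optionally followed by
# an extension such as "(V.O.)" or "(O.S.)".
CUE = re.compile(r"^(?P<name>[A-Z][A-Z0-9 '\-]*?)\s*(?:\([A-Z.]+\))?$")


def extract_story_attributes(script: str) -> dict:
    """Collect unique locations (in order of appearance) and count dialogue turns per character.

    Hypothetical helper: a formatting heuristic, not an NLP model.
    """
    locations: List[str] = []
    characters: Counter = Counter()
    for raw in script.splitlines():
        line = raw.strip()
        if not line:
            continue
        slug = SLUGLINE.match(line)
        if slug:
            location = slug.group("location")
            if location not in locations:
                locations.append(location)
            continue  # a slugline is never a character cue
        cue = CUE.match(line)
        if cue:
            characters[cue.group("name")] += 1
    return {"locations": locations, "characters": characters}
```

Lowercase action and dialogue lines never match the cue pattern, and transitions like "CUT TO:" are rejected by the colon. A line of all-caps dialogue (say, a shouted "WAIT") would still be miscounted as a character, which is the kind of edge case an off-the-shelf toolkit or a model has to clean up.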

Instrumental to this adaptation effort was Marvel writer B. Earl. Each script scene was split into dialogue and action. The dialogue was plotted out in narrative sequence on an empty storyboard. The raw action data was then ready to prompt image generation.
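The dialogue/action split itself can be approximated mechanically. Below is a minimal sketch, assuming standard screenplay layout: an all-caps character cue followed by its lines, with blank lines separating blocks. `SceneBreakdown` and `split_scene` are hypothetical names, not part of Lore Machine’s System, and the sketch ignores parentheticals and dual dialogue.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class SceneBreakdown:
    # (speaker, line) pairs in narrative order, ready to lay out on a storyboard
    dialogue: List[Tuple[str, str]] = field(default_factory=list)
    # raw action lines, later usable as image-generation prompts
    actions: List[str] = field(default_factory=list)


def split_scene(scene_text: str) -> SceneBreakdown:
    """Split one screenplay scene into dialogue and action (hypothetical helper)."""
    breakdown = SceneBreakdown()
    speaker = None  # set while we are inside a dialogue block
    for raw in scene_text.splitlines():
        line = raw.strip()
        if not line:
            speaker = None  # a blank line closes the current dialogue block
            continue
        if line.isupper() and (line.startswith(("INT.", "EXT.")) or line.endswith(":")):
            continue  # skip scene headings and transitions such as "CUT TO:"
        if line.isupper():
            speaker = line  # character cue opens a dialogue block
        elif speaker is not None:
            breakdown.dialogue.append((speaker, line))
        else:
            breakdown.actions.append(line)
    return breakdown
```

Keeping dialogue as ordered (speaker, line) pairs preserves the narrative sequence needed for the storyboard, while the action list stays free of speech and can be fed straight into prompt writing.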

Stylistic Configuration

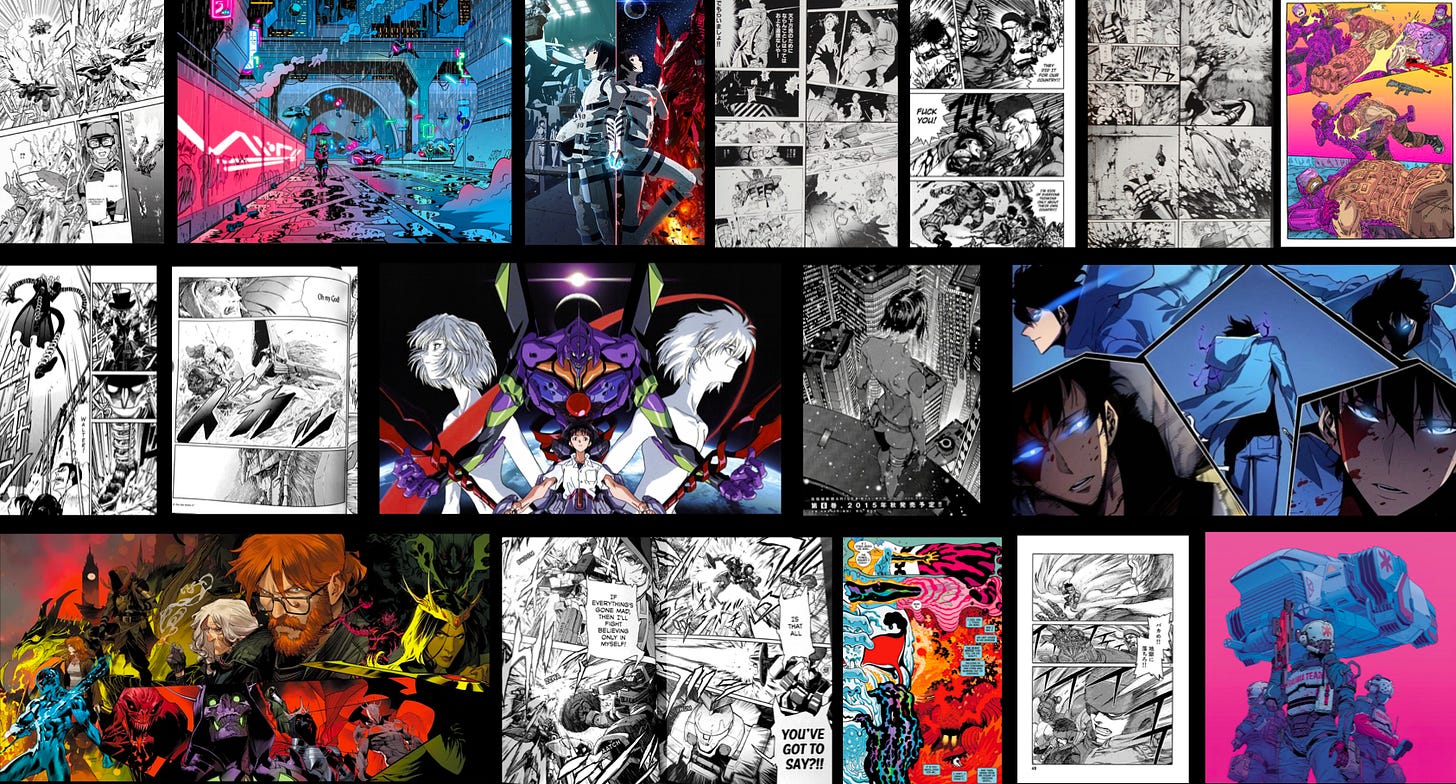

Next, we needed to establish a consistent style that could permeate the color palettes, shapes, characters, and layout of the graphical experience at story scale. The inspiration board quickly filled with thousands of found images.

It’s one thing to say psychedelic neo-neon organic cyberpunk manga. It’s another to uphold a stable expression of aspirational design traditions throughout a reading experience. To unite these aesthetic fragments, we called Lore Machine pal and synthographer Jumoke Fernandez. Here, she describes her approach:

We opted for a complete color immersion that encapsulates the intricate detailing of manga while embracing the vibrant hues and polish characteristic of anime. Stylistically, our intent was to pay homage to timeless Shonen classics like Gintama and Sket Dance.

We were hellbent on using our AI tools to establish a fresh hybrid style, one that might forge a sequential art experience contributing meaningfully to the comic canon.

Characters

With style in place, it was time to train our three key characters and the robo-spider. Jumoke outlines the approach:

We commenced by generating rudimentary 3D models for each character, which we then used in Stable Diffusion as references for ControlNet (using OpenPose and Canny edge maps). This method, coupled with effective prompts, empowered us to originate entirely unique characters without initial LoRA training.

We built pose maps for our character set, each synchronized with actions synthesized from the foundational text.

Final Image Composition

Each character and prop was then lovingly transposed onto a range of locations. We visualized four distinct worlds:

Present Day: The reality we know, with its predictable lighting and familiar objects.

The Other Side: A world beyond the space-time continuum that obeys no law.

In-Game: Digital-in-digital experiences bring viewers into the heart of play.

Flashbacks: For story clarity, memories needed their own distinct location attributes.

The composability of each final image made it possible for our team to achieve one of the most coveted attributes in the generative storytelling space: consistency. The style, locations, props, and characters’ physical and costume features remain the same across all 178 final images 😅
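One way to read "composability" here is that each panel is assembled from separately generated layers: a location plate at the bottom, then characters and props on transparent backgrounds. The post doesn’t describe the compositing step, so this is only a minimal sketch of the standard Porter-Duff "over" operator on tiny RGBA grids in pure Python; in practice an image library (for example Pillow’s `Image.alpha_composite`) would do the work. The layer order and the `over`/`composite` names are our assumptions.

```python
from typing import List, Tuple

# (r, g, b, a) with every channel in [0, 1]; alpha is straight (not premultiplied)
Pixel = Tuple[float, float, float, float]
Layer = List[List[Pixel]]


def over(src: Pixel, dst: Pixel) -> Pixel:
    """Porter-Duff 'over': place src on top of dst."""
    sr, sg, sb, sa = src
    dr, dg, db, da = dst
    out_a = sa + da * (1.0 - sa)
    if out_a == 0.0:
        return (0.0, 0.0, 0.0, 0.0)  # both pixels fully transparent

    def mix(s: float, d: float) -> float:
        # weight each color by its contribution to the final coverage
        return (s * sa + d * da * (1.0 - sa)) / out_a

    return (mix(sr, dr), mix(sg, dg), mix(sb, db), out_a)


def composite(layers: List[Layer]) -> Layer:
    """Stack layers bottom to top; layers[0] is the location background (assumed order)."""
    base = layers[0]
    result = [list(row) for row in base]
    for layer in layers[1:]:
        if len(layer) != len(base) or any(len(row) != len(base[0]) for row in layer):
            raise ValueError("all layers must share the background's dimensions")
        for y, row in enumerate(layer):
            for x, px in enumerate(row):
                result[y][x] = over(px, result[y][x])
    return result
```

The design point is that a character layer is only ever re-composited, never regenerated, so dropping it onto a Present Day plate or an Other Side plate cannot change its features.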

Product Visualization

Into the Unknown marked Lore Machine’s first foray into generative product integration. To this end, we rendered the HP OMEN line of laptops, mice, headphones and gaming chairs in the chosen stylistic configuration, while maintaining product shape and color attributes, ergonomics, and logo legibility.

To do so, we collected hundreds of high-resolution product shots from the OMEN team. These were run through an image generator trained on our style, then upscaled with the formidable Real-ESRGAN model on Replicate.

Paneling & Layout

We turned to our pal and design legend Dersu Rhodes to sculpt the generative image library into a dynamic reading experience. Dersu combined traditional, emerging and never-been-done-before manga paneling styles into a hard-edged, fast-paced spatial structure in which our story could thrive. He also constructed tactile layers to give digital readers that printed-matter feel.

Soundscape

Lastly, we turned to composer John McSwain to build a musical accompaniment to the graphical experience. We wanted an atmospheric co-pilot for the reader that complemented the multi-world journey without taking center stage. John focused on a steady rhythm and layered synths to propel the action forward with the aim of creating a tension readers could enjoy. The track was then engineered to loop seamlessly to maximize focus and eliminate distraction.
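The post doesn’t say how the loop was engineered. A common technique, shown here only as a minimal sketch, is to crossfade the end of a track into its beginning so the wrap-around point has no click or gap. It works on a plain list of mono samples with a linear fade; `make_seamless_loop` and `fade_len` are hypothetical names, and a real session would do this in a DAW or with an audio library.

```python
from typing import List


def make_seamless_loop(samples: List[float], fade_len: int) -> List[float]:
    """Return a loopable clip by crossfading the last `fade_len` samples into the first.

    The output is `fade_len` samples shorter than the input. On repeat, the end of
    the output (the original middle) flows into its start (which begins as the
    original tail), and that start fades into the original head, so both seams
    stay continuous.
    """
    if fade_len <= 0 or 2 * fade_len > len(samples):
        raise ValueError("fade_len must be positive and at most half the clip length")
    head = samples[:fade_len]
    body = samples[fade_len:-fade_len]
    tail = samples[-fade_len:]
    blended = []
    for i in range(fade_len):
        t = i / fade_len  # runs from 0 up to just under 1 across the fade
        # linear crossfade: the tail fades out while the head fades in
        blended.append(tail[i] * (1.0 - t) + head[i] * t)
    return blended + body
```

A linear fade is the simplest choice and keeps a constant signal constant. For material that isn’t correlated across the seam, an equal-power (sine/cosine) curve is often preferred because a linear fade can dip in loudness at its midpoint.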

The Future of Generative Manga

Ultimately, Into the Unknown is a grand experiment in elevating storytelling using applied AI. We aimed to put our technology to the test and contribute to emerging workflows that might push manga into unexpected new directions. The tools we used gave Lore Machine a lift on a number of fronts:

There’s no denying the speed of production. We could experiment, gain stakeholder consensus on stylistic configurations, and generate character features, action and environments at sonic speeds. The entire experience was built in under a month.

We were able to achieve big color, moving past the traditional black-and-white of most manga.

We achieved rich environments and dynamic motion that contributed to the energy of the story with relative ease.

Maybe most importantly, we built a set of story blocks that can now be reconfigured and upgraded for subsequent volumes at exponentially faster rates, giving us more time for more design innovation.

The production gave us an expansive sense of possibility in every direction. Take sound as an example: we’re looking forward to building an even more intuitive generative soundscape, powered by tools like MusicLM.

The most transformative opportunities lie in the still-nascent but very much inbound capability of AI tools to morph one format into another. The 3D render below was built in ten minutes from a single 2D image of our protagonist Ying using Kaedim, an AI-powered 3D model maker.

As multimodal generators continue to evolve, a music sample and a descriptive line of text can conjure a video; a set of 2D images can become a fully realized animated scene. From there, a feature film feels close at hand.

However it all goes down, one thing is certain: the future of generative storytelling will be a deeply human process.

Credits

Into the Unknown is a multimedia experience brought to life by Lore Machine’s Story Visualization System and a group of extraordinary human beings:

Original Script - Phil Gelatt

Synthography - Jumoke Fernandez

Comic Script - B. Earl

Layout & Graphic Design - Dersu Rhodes

Project Management - Jonathan Montaos

Soundscape Composition - John McSwain

Producer - Ella Min Chi

Special Thanks to:

Vikrant Batra and Chris Bohrer of HP

And of course, a big thank you to the OMEN team:

Grace Dong

Sophia Dong

Lei Pan

Yi Teng Liu

Xuanpei Hong

If you’re just joining in: Lore Machine is an AI-Collaborative Story Visualization System & Studio.

Lore Machine’s System comprises four interoperable pieces of software, geared to help users transform textual stories into multimedia experiences.

Lore Machine’s Studio trains emerging AI tools and a network of 51 prompt engineers to help our partners bring their visions to life!

If you’ve got some lore, we are your machine - sup@loremachine.ai.